Lecture 5: DISCOVERING NUTRITIONAL DEFICIENCY DISEASES

(Stories in the History of Medicine)

Adam Blatner, M.D.

Lecture given February, 2009; Re-posted on website August 4, 2010

(This is the fifth in a series of presentations given to the February-March 2009 session of our local lifelong learning program, Senior University Georgetown.)

Other lectures in this series include: 1. Introduction and Germ Theory, plus supplementary webpages on a brief overview of the history of medicine before 1500,

a brief overview of the history of medicine after 1500, and the history of microscopy.

2: Contagion, Infection, Antisepsis; 3: The Early History of Immunology; 4: The Discovery of Anesthesia; 5: This webpage (title above); and:

6: Hygiene: Cholera, Hookworm & Sanitation. 6a. Dental Hygiene and Plaque Control (Flossing)

Today we'll address the history of nutritional deficiencies. A theme here is the opening of previously unrecognized horizons. People didn't know there were subtle substances in food. We came to realize that there are stars beyond what we can see with the naked eye and forms of life too small to see, and similarly, there are many other subtleties that await technological enhancements before we can appreciate them. In the field of nutrition, this involves the continuing development of equipment for testing or assaying tiny amounts of chemicals.

Many of the breakthroughs in medicine were made not by physicians, but by chemists and biochemists. (Biochemistry as a field emerged only in the early 1800s when it became apparent that the chemistry of living matter was more complicated and based mainly on the properties of the carbon atom.) Before that, though, what some pioneers did was more a job for Sherlock Holmes: a process of deduction and experiment, without fully understanding why or how a remedy worked.

The topics to be addressed today include basic protein deficiency in starvation; iron deficiency anemia; scurvy; iodine deficiency and subsequent low thyroid function; rickets from vitamin D deficiency; beriberi from Vitamin B1 deficiency; and pellagra from niacin deficiency---and some of the stories of the discovery of their existence. It should be noted, though, that new subtle nutrients are still being discovered, or research is revealing that more or less of this or that is optimal. For example, only about 17 years ago evidence accumulated that mothers need increased folic acid to reduce the incidence of certain birth defects such as spina bifida. Now it's being added to foods!

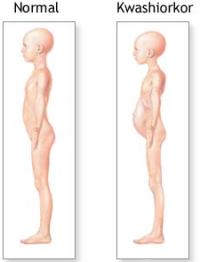

Our first condition to be noted is that of kwashiorkor, a disease of protein deficiency. Generally, this is associated with many other types of nutritional deficiency, but the lack of protein has its own consequences. The muscles shrink, the hair develops a reddish tint, and the liver swells and its fat content grows to compensate for the lack of protein, which gives the impression of a fat belly---but that is not fat; it represents a liver that is diseased and not functioning properly.

One cause of kwashiorkor is of course simple famine, one of the four horsemen of the apocalypse. Let's note a point here, though: A person can become malnourished even if they are getting enough general foodstuffs in the form of simple calories. This is because there are three main categories of food: carbohydrates, fats and proteins. Carbohydrates are mainly used for basic calories, as fuel for the cells to live. Fats are also used mainly for fuel, but some of their components are important as building blocks. Proteins are broken down into amino acids that are mainly used as building blocks in growing new tissues or replacing old cells. We need all three types.

Interestingly enough, it's possible to have a diet that is made up mainly of calories. Food is used both for fuel and for building blocks, and we need both. The baby in the picture on the right was probably fed on corn mush, or what some might call "empty calories." These calories aren't empty in the sense that they do provide fuel, but such a diet doesn't adequately provide the variety of proteins and other nutrients needed to keep up health. This baby also has kwashiorkor, even though she isn't starving. Sadly, there are babies in poor families (and even some pampered babies in rich families who get fine sweets!) who don't get a truly balanced diet and get sick as a result!

Thus, being a little fat in the cheeks and elsewhere isn't a reliable guide. This baby may have been fed mainly on corn mush. The raw creases at the edges of the mouth are called cheilosis. Other signs include the reddish hair and the empty gaze. Protein deficiency, kwashiorkor, leads to mild mental retardation, because the brain lacks the nutrients to work with. Thus part of prevention is education of parents about good nutrition, and some teen parents aren't bothering to learn what they need to about child care---their babies are thus a population at risk. Indeed, one of the points of this and the next lecture is an appreciation of the nature of public health as a preventive force in society.

In the last several centuries it seemed to be profitable to slave-owners or managers of institutions to cut costs and maximize profit by feeding their charges with the cheapest foods. As a result, children, the insane, prisoners, slaves, and often soldiers and sailors too, received the most meagre rations compatible with life.

The picture on the left shows native Indian slaves in 17th century Florida planting and hoeing. (Yes, the Spanish Conquistadores enslaved the aboriginal population! They felt entitled to do so because they were possessors of truth and goodness and the Indians were heathens who, by not being baptized, didn't merit the privileges of full humanity---i.e., it was okay to make them into slaves.) Whereas the Indians ate a varied diet based on a hunter-gatherer cultural practice, as slaves their diet became mainly maize corn, which lacks a number of nutrients---iron being one of them.

As a result, children struggled with chronic iron deficiency. Iron is needed to build blood cells, and if there isn't enough in the diet, the body works overtime. The bone marrow, where blood cells are manufactured, expands. For example, while the skull doesn't usually have much space for marrow between its outer and inner plates, in chronic anemia this space expands and the bone becomes strangely porous---a condition known as porotic hyperostosis (bony overgrowth). To the right is a picture of the skull of one of the children of those 17th century Florida Indians, indicating that chronic iron-deficiency anemia had become common.

Iron and Hemoglobin

The mineral iron is quite common, and indeed is one of the main components of the planet's core, accounting in great part for the Earth's magnetic field. The iron atom figures in a number of key biological compounds and enzymes, especially those that derive from the "porphyrin" molecule shown on the left. (I have always been impressed with the beauty of the form of this molecule!) The iron is the red part surrounded by the blue nitrogen atoms, and located so that depending on minute fluctuations of acidity or alkalinity, it tends to either attract a molecule of oxygen and release carbon dioxide (as happens in the slightly less acidic environment of the lungs) or vice versa: In the slightly more acidic environment of the tissues, where new oxygen is needed and carbon dioxide is building up, the hemoglobin molecule lets go of its loosely bound oxygen and binds instead to carbon dioxide. Thus, hemoglobin is the key messenger substance of blood, the blood corpuscle is its package, and iron is its core component. If there isn't enough iron, the body can't easily build up the rest of the system, the blood cells become pale---not enough red-colored molecules of hemoglobin---and the result is anemia.

In anemia, the tissues aren't getting enough oxygen and the person feels bad and tired, and exertion quickly becomes exhausting. Because people didn't understand that slaves and other poorly nourished people really were suffering with a handicap of low blood hemoglobin, their seeming sluggishness was interpreted as laziness, "shiftlessness," and proof that they were unworthy of respect. This reinforced the illusions of righteousness of the class of people who exploited their labor. This dynamic still goes on in many parts of the world today.

With anemia, the problem is fatigue. Since no one listened to complaints, the fatigue was interpreted as what? Right, laziness. That proves these people are unworthy, not energetic, and therefore unworthy of moral respect. It’s right that they be treated as less than full persons. No-account, lazy, worthless, blame-worthy—bad, not sick.

The astute 17th century English physician Thomas Sydenham was wary of many traditional theories and treatments, and generally tried to avoid bleeding, leeches, the use of mercury-containing drugs, and the like. He found that pale, weak people would often respond well to a tonic made by steeping iron filings in a kind of tea.

Gradually, though, mainly near the beginning of the 20th century, when a variety of biochemists were exploring all sorts of nutrients, the value of iron became more established as a remedy for anemia. This was found to be fairly common, in fact. Some girls in the 19th century were diagnosed with "chlorosis"---a condition that has not been diagnosed for over eighty years! The name refers to a greenish pallor; it comes from the same Greek root for green as chlorine and the green plant pigment chlorophyll (remember those green gums in the 1950s?). In retrospect, this condition may have been a mixture of psychosomatic symptoms, some anorexia, anemia due to menstruation without a compensatory diet, tight clothing, and so forth. Some of these symptoms may have been overlooked also because bleeding was still a common treatment, and being somewhat anemic was even a little fashionable.

Scurvy: Vitamin C Deficiency

Another condition described centuries ago and not correctly understood was the disease called scurvy. It was prevalent among a variety of groups of people who had been restricted to a narrow range of food, generally not including fresh fruits and vegetables. Prisoners, slaves, soldiers on long campaigns, poor people or children living in asylums, orphanages, or institutions, and especially sailors---all were not uncommonly afflicted with scurvy. It's been estimated that, reviewing the losses on returning ships, possibly more than a million sailors died from scurvy during the thousands of voyages that were undertaken between 1500 and 1830! Their diet had little that would provide the proper nutrients, you see: It consisted of salted meats (bacon or fish), old cheese, dried beans or lentils, occasional oatmeal or other cereals, hardtack (a dense, dry biscuit), water, beer, or "grog," which had a higher level of alcohol than beer.

Vitamin C is needed for connective tissues to form with some strength. So one sign is that the skin has little blue-red places where blood leaks out from fragile capillaries, or larger areas of subdermal (under-the-skin) bleeding that look like a bad bruise. The gums become tender---there's just little resistance to the prevalence of gum disease (see the explanation of that in the next lecture-addendum).

Really, only small amounts of vitamin C are needed, and a deficiency takes months to appear, unless one has already been marginally malnourished. For soldiers and especially sailors on expeditions lasting many months or longer, biscuits can give enough calories, but those in themselves are not sufficient. Gradually, the men begin to tire more easily and have other symptoms. As with iron deficiency, their upper-class commanders just thought they were lazy. After six months, the deficiencies show: The body’s capacity to resist infection plummets. Folks die from secondary infection, and sometimes from internal bleeding, because Vitamin C helps the tissues be firm. Without it, tiny blood vessels break down and people start to get small bruises spontaneously and bruise more easily, and the gums also bleed and get infected (periodontal disease). As teeth have problems, other types of malnutrition also occur.

I recently read a case study about an overly picky and obsessive child — a toddler, actually—who was permitted by his conflict-avoiding parents to restrict his diet. He'd tantrum if pushed to eat beyond his familiar range. As a result, this child developed a variety of symptoms for which he was medically evaluated thoroughly at some of the top hospitals in the area. They came up with a variety of rather rare diagnoses. But no one had taken a careful dietary history until finally someone did and recognized that scurvy should be included in the differential diagnosis. A trial of larger amounts of vitamin C began to reverse his symptoms, and this was then organized into a continuing recovery program that also included psychiatric treatment for his obsessions.

Another case I heard of is that of one of these Silicon Valley geeks who worked late hours and relied on those cheese and cracker snacks and coffee that you can get from the machines in the break room. He, too, came down with early symptoms of scurvy, was misdiagnosed for a time, and was finally correctly diagnosed. But a few hundred years ago they didn’t know about nutritional deficiencies at all.

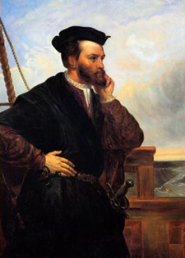

James Lind knew of the experience of the explorer Jacques Cartier in northeast Canada in 1535 (picture at right): Many of his men were afflicted with scurvy, and the local indigenous tribes knew a treatment for this: A tea of pine needles. It worked because all sorts of fresh fruits and vegetables are fairly rich in ascorbic acid---though at the time we didn't know what that substance was!

At any rate, Lind did a number of experiments on sailors with scurvy, trying different diets.

An associated story is that his findings were not immediately accepted, and indeed, were not really implemented until over forty years later, when the Admiralty made a rule that all sailors would henceforth have citrus fruits included in their diets---whence comes the nickname "limeys" for English sailors! This shift may well have had a positive impact on the status of the naval forces that struggled with Napoleon's navy at the battle of Trafalgar and in other naval battles. Other countries such as France had not made this adjustment and continued to suffer from scurvy.

Another interesting twist: Around 1800 the potato came into much wider use in the diet of people in Ireland and Great Britain: Potatoes have only a small amount of Vitamin C, but this can be enough to sustain the body's needs, so again, scurvy began to drop away as a condition. (One of the consequences of the great potato blight in Ireland was not only famine, but also scurvy.)

Scurvy was also a danger on the Arctic explorations in the late 19th and early 20th century, and here is a picture of some men in 1875 getting their lime juice rations:

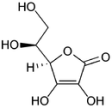

Casimir Funk in 1912 worked on the idea of vitamins, noting their amine base and the need for small amounts of these substances---he was originally writing about thiamine (the suffix -amine tends to refer to a chemical with an active nitrogen-hydrogen component), as will be discussed further on in the section on beriberi. But Funk also presciently suggested that a lack of these substances might well be at the root of such conditions as beriberi, scurvy, pellagra, and rickets!

Interestingly, it wasn’t until the late 1920s and 1930s that ascorbic acid was identified.

Albert Szent-Györgyi (his 1948 photo on the right) was awarded the 1937 Nobel Prize in Medicine "for his discoveries in connection with the biological combustion processes, with special reference to vitamin C and the catalysis of fumaric acid". He also identified many components and reactions of the citric acid cycle independently from Hans Adolf Krebs.

of mind and body that comes from a reduced metabolism. Iodine is a common element in seawater and fish, but in inland areas, the iodine tends to have been washed away by millennia of rain and rivers, so areas such as Switzerland in Europe or Michigan in the United States have little iodine in the soil or the foods grown there. Before iodine fortification of salt and food, people in such regions (as even today in many other areas of the world that don't have iodine supplementation) would get large lumps in their necks. These come from an overgrowth of the thyroid gland, which is trying to make more thyroid hormone even in the face of the lack of a key building block, iodine, much as I described above how in iron deficiency the bone marrow also expands and distorts its surroundings. The overgrowth looks like a lump in the neck that's called "goiter" (two examples in photos on the left).

Goiter is disfiguring, but what's even worse is a condition that happens to the babies of mothers with not enough thyroid. They have a characteristic appearance that comes from a growth delay. If it is not corrected, they grow up stunted in height and also mentally retarded! This condition is known as cretinism, and the children were called cretins. If the children are treated early with thyroid hormone and given adequate iodine in their diets, as you may see in the picture on the left, some or much of this mental retardation can be reversed and they change their appearance! In most cases, goiter of short duration also can be treated with iodine supplementation in the diet. If goiter is untreated for around five years, however, iodine supplementation or thyroxine treatment may not reduce the size of the thyroid gland because the thyroid is permanently damaged.

Near the end of the 19th century the use of extracts of thyroid was found to help low thyroid conditions, and biochemists also noted that the thyroid gland was rich in iodine. The introduction of iodized salt since around the 1920s has eliminated this condition in many affluent countries. However, in Australia, New Zealand, and several European countries, iodine deficiency is a significant public health problem. It is more common in poorer nations. While noting recent progress, the main medical journal in Great Britain, The Lancet, editorialized, "According to the World Health Organization (WHO), in 2007 nearly 2 billion individuals had insufficient iodine intake, a third being of school age. ... Thus iodine deficiency, as the single greatest preventable cause of mental retardation, is an important public-health problem." Another complicating factor comes from the way public health initiatives to lower the risk of cardiovascular disease have resulted in lower discretionary salt use at the table. Also, with a trend towards consuming more processed foods, the non-iodized salt used in these foods means that people are less likely to obtain iodine from adding salt during cooking.

Finally, the thyroid was one of the earlier glands to have its function recognized, though that was only in the late 19th century. Most glands we know about---tear ducts, sweat glands, and even the mucus-secreting tissues of the nose or windpipe---are "exo-crine"---meaning they secrete to the outside (exo-). Claude Bernard (picture, right) in the mid-1800s began to explore the way some organs such as the liver or pancreas secrete inside the body (though such bile and digestive secretions are technically also exocrine). But he began to consider that some organs also secrete directly into the bloodstream---and that's where the endo-crine organs began to be understood---organs such as the thyroid, adrenals, and others.

Rickets was noted in the 17th century, but became more common when increasing numbers of people moved from the farm---where kids played outdoors and got more sunlight---to the city, where a child might help earn a living by working indoors. It was easy, also, to live so much in the shadows in a smoggy city that again there's not much sunlight---and sunlight stimulates the production of Vitamin D in the skin. So for shut-ins or people in the northern climates who couldn’t get it in milk, rickets became rather common. (The adult form of vitamin D deficiency is osteomalacia, a word that hints at the main pathology: The bones ("osteo-") become soft ("-malacia"), and other problems arise from this!) Also, a diet that includes milk also includes calcium, which helps Vitamin D be absorbed; in turn, Vitamin D helps calcium be absorbed! Vitamin D also helps regulate the levels and use of calcium, but sadly many people get insufficient doses of either.

Another problem is that girls tend to get pelvic deformities that then increase significantly the likelihood of complications of delivery, requiring the use of forceps or sometimes resulting in the death of the baby and mother. The frequency of such problems (secondary to rickets) increased the use of male physicians as obstetricians, and the decline of the role and influence of the midwife.

Vitamin D is present in significant amounts in cod liver oil (as is Vitamin A). When I was a kid I was given cod liver oil by my mom. It was a fashionable supplement in the 1920s through the 1940s, before the vitamins were synthesized and included in many fortified foods. Though it didn't taste good, cod liver oil was recommended for rickets treatment as early as 1789 by Thomas Percival in England, though this treatment wasn't widely recognized. Dickens’ London was prime territory for rickets, and Tiny Tim may have been suffering from it.

In the American South, an interesting problem came up: Melanin in the skin blocks ultraviolet light, so African-Americans absorb only about a third as much as Caucasians. So those who worked indoors or in overcast conditions were prone to rickets, and some doctors even came to think of it as a disease of slaves! Even as late as the 1950s more black women had obstetrical problems due to deformed pelvises due to low-grade rickets, and as late as 1977 a national study of black pre-schoolers found a very high incidence of at least sub-clinical rickets. Also, the full body clothing of some Muslim women in some cultures is associated with increased rickets. In Ethiopia, full swaddling of babies again leads to a higher incidence of rickets in children there. The problem with some northern animal livers is that they are so rich in Vitamins A and D that they can make you sick---it's called "Hyper-vitaminosis"---applicable only to the fat-soluble vitamins---and if you have a nice meal of polar bear liver it can kill you!

A little chronology: In 1915, McCollum, Davis & Kennedy described vitamins A and B, though sub-types were soon discerned in the following years.

In 1917, biochemists discovered that the key agent in cod liver oil, the Vitamin D, was the compound cholesterin. In 1919 E. Mellanby began trying it out as a cure for experimentally created rickets in animals. Around that time Huldschinsky demonstrated the curative effect of sunlight on rickets using a quartz lamp. On the right is a photo of the use of the quartz lamp to provide artificial sunlight for children in the prevention and treatment of rickets.

Around 1920 H. Steenbock in the USA developed and patented a food irradiation process using ultraviolet light, and found that common foods so treated could protect many children against this horrible disease. By 1924, most Americans were consuming irradiated milk and bread, and within a few years rickets was nearly eradicated throughout the country. About that same time, a German scientist identified three different types of vitamin D, of which two were derived from irradiated plant sterols, and the third type from irradiated skin; this is the form we receive when we are exposed to sunshine. This information led scientists to develop a way to synthesize vitamin D, and since this was much more economical than irradiation, many food manufacturers began adding the vitamin to their products.

Thiamine is found in a variety of foods, especially whole grains. With rice, the vitamin is found in the pericarp or covering of the grain, which gets rubbed off in the process of polishing. Brown rice doesn't keep as well and tends to get rancid, and polished white rice has long been considered a refinement, eaten by the better sorts of people. If there are enough other types of food this is not a problem, but it's a bit like the problem described above with scurvy: Sailors, soldiers, and others on otherwise somewhat restricted diets, if they don't get the whole rice, come down with a multi-system disease called beriberi, which is found throughout Asia in a varied population. It was common in some armies and navies because, as in Europe, common soldiers and sailors were given rather meager rations.

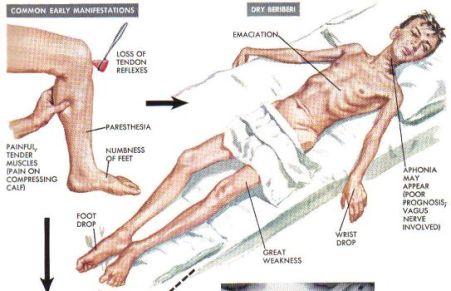

Beriberi was described by the Chinese around 2700 BC, but the cause was unknown. As seen on the chart to the left, it's a multi-system disease. The fellow at the right also probably suffers from starvation---some of the prisoners of the Japanese at the end of WW2 had this condition. But other people may not be starved of protein or calories, but still become sick.

the Dutch East Indies in 1887, to work in Batavia, then the capital (on what is now the island of Java). Eijkman worked to find the source of infection but was unsuccessful. One day Eijkman noted that some chickens that fed on the garbage from the hospital and the prison were moving in a jerky way that had some similarities to the kind of nerve weaknesses associated with the disease, and wondered if they might be suffering from the same germ. Then for a few months they got better. Then they got worse again. Following up on this, Eijkman discovered that the month before and during their improvement, the food they were eating contained a good deal of unpolished brown rice. Their diet included the residue of what was fed some prisoners at a nearby jail. Then that stopped and the chickens returned to the garbage that included only polished rice. Could there be something about the rice? Following this lead, the doctor came to a sort-of-right, sort-of-wrong answer: The rice, he thought, might have a toxic agent, but it was counteracted by whatever was in the brown husk. The point was that diet could be a source of disease. He published the results of his many experiments in 1897 in a German journal, and for this Eijkman was later awarded the 1929 Nobel Prize in Medicine (in conjunction with the more accurate corrective work of F. G. Hopkins, noted next). As mentioned above, Funk followed up on this in 1911, confirmed such findings in birds, and postulated substances called vital amines, later abbreviated to vitamins.

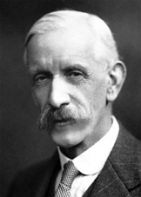

Hopkins demonstrated the need for thiamine in the diet in work published from 1906 to 1912, and was a co-recipient of the 1929 Nobel Prize in Medicine for following up on and confirming the preliminary findings of Eijkman. This biochemist made a number of contributions in the field of nutrition, and as the presenter at the Nobel Awards said, [You] "...demonstrated the physiological necessity of the vitamins for normal metabolism and growth, thus very considerably extending our knowledge of the importance of vitamins for life processes as a whole."

In 1926 two biochemists called Jansen and Donath isolated a tiny amount of a substance they called aneurin but there was too little to be of much value. In 1936 thiamine was finally identified and created synthetically. Commercial production did not start until 1937 but it achieved great importance in the 1950s with the demand for the fortification of food.

Interestingly, after 1950, the growth of rice polishing mills throughout the Pacific area led to far more beriberi. As I mentioned, hand husking and threshing weren’t as efficient, and so had left more of the vitamin in the rice. It took a lot of politics and hustling to get the companies to cut back on their efficiency, leaving enough vitamin in. Others began to add synthetic vitamin instead: in the USA, refined flour was re-enriched with thiamine, and we remember companies advertising how their bread was enriched. But in fact, beriberi is still widespread due to the Asian equivalent of junk food.

though it shows most clearly in what has been called the three "D"s: dermatitis, diarrhea, and dementia.

In 1902, a Georgian farmer complaining of weight loss, great blisters on his hands and arms, and melancholy every spring for the previous 15 years was recognized to be suffering from pellagra. Four years would elapse until it was diagnosed again, this time in an Alabama insane asylum, where the classic constellation of diarrhea, dermatitis, dementia and even death appeared in multiple inmates. Over the next five years, southern clinicians would increasingly diagnose pellagra among their poor and institutionalized populations. These people subsisted largely on a diet of corn and fat pork. The situation was most acute for those living in prisons, orphanages, and asylums. Eight states from 1907 through 1911 recorded 15,870 cases of pellagra with a 39% fatality rate. In South Carolina alone in 1912, there were 30,000 cases with 12,000 deaths. But this underestimated the problem, as only 1 in 6 people suffering from pellagra sought out a physician.

At that time, physicians believed that pellagra was caused by a germ of

some sort, perhaps a fungus on corn or a virus spread by flies. In

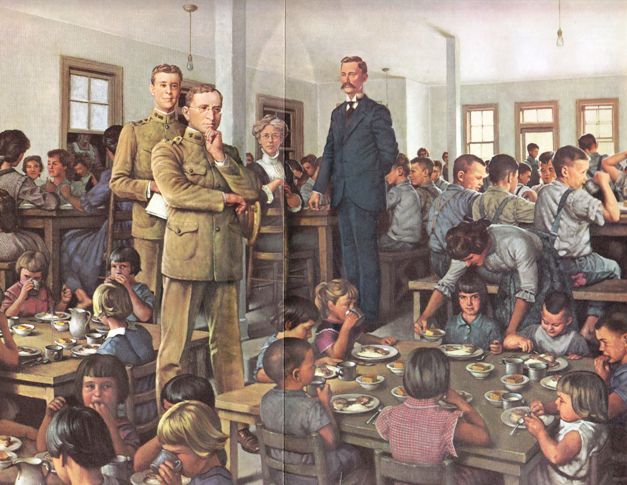

1914, the US Surgeon General sent Dr. Joseph Goldberger, a physician in the Public Health Service, south to

investigate the cause of pellagra. He was impressed by the monotony of

the diet eaten by the poorest Southerners, especially mill workers,

tenant farmers, and institutionalized persons.

Manipulating diet in experimental studies, he was able to both create and cure pellagra. Despite the denial of southern politicians that such malnutrition existed, in each of the years 1928, 1929, and 1930, the South suffered more than 200,000 cases of pellagra and 7,000 deaths. Like hookworm, this disease further weakened the southern workforce and stunted the physical and mental growth of children.

A major part of this story is that Goldberger sought for many years to promote better nutrition, a campaign that pitted him against those who wanted to spend as little money as possible: Employers, of course; but also politicians whose careers to some extent depended on the contributions of their wealthier constituents.

Goldberger's prescription of a nutritious diet was beyond the means of many Southerners, but in the 1920s, researchers found that brewer's yeast could prevent the disease. After work in the 1930s showed a lack of nicotinic acid (niacin) to be the precise defect in pellagra, flour producers began to enrich both white and corn flour with the newly identified vitamin. Such foods, coupled with rising prosperity after World War II, finally eradicated pellagra in the South.

Goldberger worked tirelessly in his laboratories. He is shown here with his assistant, and in another laboratory that was in effect more of a kitchen!

Furthermore, the NIH found through its Women's Health Study of forty thousand women over ten years that those who took Vitamin E showed no statistical benefit compared to those who didn't when it came to the incidence of heart disease, and the "anti-oxidant" properties of Vitamin C or beta-carotene also showed no effect when it came to heart disease. A health study of physicians again showed no benefits from Vitamin C or Vitamin E, and in another study of 36,000 women, Vitamin D or selenium offered no protection against breast cancer.

The emergence of the recognition of the need for a more varied diet with a wider range of vitamins and minerals was only around 80 - 110 years ago. That is to say, while the first four lectures dealt with events generally occurring between the mid-1700s through around 1870, the lecture today will address the era known as fin-de-siecle—French for “end of the century”—referring to the end of the 19th and beginning of the 20th century; and next week we’ll talk about changes that began in the mid-19th but continue well into the present time.

Anyway, vitamins emerged during my parents’ youth and still were a big thing when I was growing up. Their being so special was because they were an emerging technology. Deficiency disease was still prevalent when they were growing up. They hadn’t yet started fortifying foods—a term that means adding synthetically produced vitamins D, some B vitamins, iodine in the salt, and so forth. So I want to re-state in our parents’ honors that we may join them in appreciating these developments and allowing ourselves to appreciate by learning a bit about how these changes came about, honoring the efforts of the pioneers.

The funny thing is that many of the pioneers were right for partly the wrong reasons, or were wrong for a while before they finally came up right, and that’s another point of these stories. When I was growing up discoverers, it seemed, knew where they were going and how to get there. Only later did I discover that, say, Columbus, was largely mistaken about a number of what he thought he had discovered and it wasn’t that at all, plus his management of his team and the indigenous tribes he encountered was, let’s just say, poor.

Complicating this is that people often suffered from multiple dietary deficiencies, perhaps co-morbid with other chronic infections such as hookworm disease, malaria, and so forth. The term “co-morbid” is worth knowing, meaning simply that a person can come into the emergency room with both a broken arm and a burn.

Another disorder of malnutrition came with some of the elderly patients who drifted into a tea-and-toast type diet, emphasizing easy-to-prepare foods, and as a result developing a variety of nutritional deficiency diseases. I suspect this to be happening even today!

In the last part of the first aphorism of Hippocrates, the father of medicine, I interpret this as a call on the physician to be active in public health, and ultimately in politics. One might even wonder about the boundary with religion—there are questions about the basic question of ... but is it good for health?.. in a number of religious traditions. It’s the politics—not just the politics of getting an idea accepted within the profession, which we have talked about—but getting an idea accepted by the politicians in the service of changing the social system. A major part of this is just getting the haves in control to arrange to share a bit with the have-nots out of power, and I’m not talking about small changes, nor class warfare, whatever that means—I may do a series on the realities of class in modern society, and how folks try to pretend that it’s not there---- but the truth is that in many parts of the world, folks are sick and dying and starving to death. So can physicians rightly try to make externals cooperate? This is the boundary of public health that well weave in both today and next time.

Protein Deficiency

One cause of kwashiorkor is of course simple famine, one of the four horsemen of the apocalypse. Let's note a point here, though: A person can become malnourished even if they are getting enough general foodstuffs in the form of simple calories. This is because there are three main categories of food: carbohydrates, fats and proteins. Carbohydrates are mainly used for basic calories, as fuel for the cells to live. Fats are also used mainly for fuel, but some of their components are important as building blocks. Proteins are broken down into amino acids that are mainly used as building blocks for growing new tissues or replacing old cells. We need all three types.

It's possible, interestingly enough, to have a diet that is made up mainly of calories. But the body needs both the fuel and the building blocks. The baby in the picture on the right was probably fed on corn mush, or what some might call "empty calories." They're not empty in the sense of providing fuel, but such a diet doesn't adequately provide the variety of proteins and other nutrients needed to keep up health. This baby also has kwashiorkor, even though she isn't starving. Sadly, there are babies in poor families (and even some pampered babies in rich families who get fine sweets!) who don't get a truly balanced diet and get sick as a result!

Thus, being a little fat in the cheeks and elsewhere isn't a reliable guide. This baby may have been fed mainly on corn mush. The raw creases at the edges of the mouth are called cheilosis. Other signs include the reddish hair and empty gaze. Protein deficiency, kwashiorkor, leads to mild mental retardation, because the brain lacks the nutrients to work with. Thus part of prevention is education of parents about good nutrition, and some teen parents aren't bothering to learn what they need to about child care---their babies are thus a population at risk. Indeed, one of the points of this and the next lecture is an appreciation of the nature of public health as a preventive force in society.

Iron Deficiency

The picture on the left shows native Indian slaves in 17th century Florida planting and hoeing. (Yes, the Spanish Conquistadores enslaved the aboriginal population! They felt entitled to do so because they were possessors of truth and goodness and the Indians were heathens who, by not being baptized, didn't merit the privileges of full humanity---i.e., it was okay to make them into slaves.) Whereas the Indians ate a varied diet based on a hunter-gatherer cultural practice, as slaves their diet became mainly maize corn, which lacks a number of nutrients---iron being one of them.

As a result, children struggled with chronic iron deficiency. Iron is needed to build blood cells, and if there isn't enough in the diet, the body works overtime. The bone marrow where blood cells are manufactured expands. For example, while the skull doesn't usually have much space for marrow between its outer and inner plates, in chronic anemia this space expands and the bone becomes strangely porous---a condition known as porotic hyperostosis (bony overgrowth). To the right is a picture of the skull of one of the children of those 17th century Florida Indians, indicating that chronic iron-deficiency anemia had become common.

Iron and Hemoglobin

In anemia, the tissues aren't getting enough oxygen, so the person feels bad and tired, and exertion quickly becomes exhausting. Because no one understood that slaves and other poorly nourished people really were suffering with a handicap of low blood hemoglobin, their seeming sluggishness was interpreted as laziness, "shiftlessness," and proof that they were unworthy of respect. This reinforced the illusions of righteousness of the class of people who exploited their labor. This dynamic still goes on in many parts of the world today.

With anemia, the problem is fatigue. Since no one listened to complaints, the fatigue was interpreted as what? Right, laziness. That proves these people are unworthy, not energetic, and therefore unworthy of moral respect. It’s right that they be treated as less than full persons. No-account, lazy, worthless, blame-worthy—bad, not sick.

Treatment

Here and there in the history of medicine there were physicians who prescribed something containing iron.

Gradually, though, mainly near the beginning of the 20th century, when a variety of biochemists were exploring all sorts of nutrients, the value of iron became more established as a remedy for anemia. This was found to be fairly common, in fact. Some girls in the 19th century were diagnosed with "chlorosis"---a condition that has not been diagnosed for over eighty years! The name comes from the Greek word for pale green, the same root found in chlorine, a greenish gas, and in chlorophyll, the green pigment of plants (remember those green gums in the 1950s?). In retrospect, this condition may have been a mixture of psychosomatic symptoms, some anorexia, anemia due to menstruation without a compensatory diet, tight clothing, and so forth. Some of these symptoms may have been overlooked also because bleeding was still a common treatment, and being somewhat anemic was even a little fashionable.

Scurvy: Vitamin C Deficiency

Another condition described centuries ago and not correctly understood was the disease called scurvy. It was prevalent among a variety of groups of people who had been restricted to a narrow range of food, generally not including fresh fruits and vegetables.

Prisoners, slaves, soldiers

Vitamin C is needed for connective tissues to form with some strength. So one sign is that the skin has little blue-red places where blood leaks out from fragile capillaries, or larger areas of subdermal (under-the-skin) bleeding that look like a bad bruise. The gums become tender---there's just little resistance to gum disease (see the explanation of that in the next lecture-addendum).

Really, only small amounts of vitamin C are needed, and a deficiency takes months to appear, unless one has already been marginally malnourished. For soldiers and especially sailors on expeditions lasting many months or longer, biscuits can give enough calories, but those in themselves are not sufficient. Gradually, they begin to tire more easily and have other symptoms. As with iron deficiency, their upper-class commanders just thought they were lazy. After six months, the deficiencies show: The body’s capacity to resist infection plummets. Folks die from secondary infection, and sometimes from internal bleeding, because Vitamin C helps the tissues be firm. Without it, tiny blood vessels break down and people start to get small bruises spontaneously and bruise more easily, and the gums also bleed and get infected (periodontal disease). As teeth have problems, other types of malnutrition also occur.

I recently read a case study about an overly picky and obsessive child — a toddler, actually—who was permitted by his conflict-avoiding parents to restrict his diet. He'd tantrum if pushed to eat beyond his familiar range. As a result, this child developed a variety of symptoms for which he was medically evaluated thoroughly at some of the top hospitals in the area. They came up with a variety of rather rare diagnoses. But no one had taken a careful dietary history until finally someone did and recognized that scurvy should be included in the differential diagnosis. A trial of larger amounts of vitamin C began to reverse his symptoms, and this was then organized into a continuing recovery program that also included psychiatric treatment for his obsessions.

Another case I heard of is that of one of these Silicon Valley geeks who worked late hours and relied on those cheese and cracker snacks and coffee that you can get from the machines in the break room. He, too, came down with early symptoms of scurvy, and was misdiagnosed for a time before finally being correctly diagnosed. But a few hundred years ago they didn’t know about nutritional deficiencies at all.

James Lind (1716-1794)

This pioneer in the history of medicine was a ship's surgeon in the British Royal Navy for nine years (1739-48), later shifting over to work in the Royal Naval Hospital. Lind became interested in scurvy, did some research, considered that it might have to do with the diet, and tried a variety of different diets. The ones that included fresh citrus juices clearly had the best response. Lind acknowledged in his 1753 treatise that others had also come to think this way about fresh fruits, such as Sir Richard Hawkins in 1593, Commodore James Lancaster in 1601, and others. I'm not sure if Lind ...

At any rate, Lind did a number of experiments on sailors with scurvy, trying different diets:

Another interesting twist: Around 1800 the potato came into much wider use in the diet of people in Ireland and Great Britain: Potatoes have only a small amount of Vitamin C, but this can be enough to sustain the body's needs, so again, scurvy began to drop away as a condition. (One of the consequences of the great potato blight in Ireland was not only famine, but also scurvy.)

Scurvy was also a danger on the arctic explorations in the late 19th

Casimir Funk in 1912 worked on the idea of vitamins, noting their amine base, and

Albert Szent-Györgyi (his 1948 photo on the right) was awarded the 1937 Nobel Prize in Medicine "for his discoveries in connection with the biological combustion processes, with special reference to vitamin C and the catalysis of fumaric acid". He also identified many components and reactions of the citric acid cycle independently from Hans Adolf Krebs.

Iodine deficiency and Thyroid Function

Another previously unappreciated mineral deficiency involves the element iodine. This lack makes it difficult for the thyroid gland to manufacture enough thyroxine, a hormone that helps in the process of basic metabolism, the chemical processes that maintain life. With too little thyroxine, the person develops hypothyroidism or myxedema, a sluggishness ...

Goiter is disfiguring, but what's even worse is a condition that happens to the babies of mothers with not enough thyroid. They have a characteristic appearance that comes from a growth delay. If it is not corrected, they grow up stunted in height and also mentally retarded! This condition is

Near the end of the 19th century the use of extracts of thyroid was found to help low thyroid conditions, and biochemists also noted that the thyroid gland was rich in iodine. The introduction of iodized salt since around the 1920s has eliminated this condition in many affluent countries. However, in Australia, New Zealand, and several European countries, iodine deficiency is a significant public health problem. It is more common in poorer nations. While noting recent progress, the main medical journal in Great Britain, The Lancet, editorialized, "According to the World Health Organization (WHO), in 2007 nearly 2 billion individuals had insufficient iodine intake, a third being of school age. ... Thus iodine deficiency, as the single greatest preventable cause of mental retardation, is an important public-health problem." Another complicating factor comes from the way public health initiatives to lower the risk of cardiovascular disease have resulted in lower discretionary salt use at the table. Also, with a trend towards consuming more processed foods, the non-iodized salt used in these foods also means that people are less likely to obtain iodine from adding salt during cooking.

Finally, the thyroid was one of the earlier glands to have its function recognized, though that was only in the late 19th century. Most glands we know about---tear ducts, sweat glands, and even the mucus-secreting tissues of the nose or windpipe---are "exo-crine"---meaning they secrete to the outside (exo-). Claude Bernard (picture, right) in the mid-1800s began to explore the way some organs such as the liver or pancreas secrete inside the body (though such bile and digestive secretions are technically also exocrine). But he began to consider that some organs also secrete directly into the bloodstream---and that's where the endo-crine organs began to be understood---organs such as the thyroid, adrenals, and others.

Rickets: A Disease Arising from a Deficiency of Vitamin D

Another problem is that girls tend to get pelvic deformities that then increase significantly the likelihood of complications of delivery, requiring the use of forceps or sometimes resulting in the death of the baby and mother. The frequency of such problems (secondary to rickets) increased the use of male physicians as obstetricians, and the decline of the role and influence of the midwife.

Vitamin D is present in significant amounts in cod liver oil (as is Vitamin A). When I was a kid I was given cod liver oil by my mom. It was a fashionable supplement in the 1920s through the 1940s, before the vitamins were synthesized and included in many fortified foods. Though it didn't taste good, cod liver oil was recommended as a treatment for rickets as early as 1789 by Thomas Percival in England, though this treatment wasn't widely recognized. Dickens’ London was prime territory for rickets, and Tiny Tim may have been suffering from it.

In the American South, an interesting problem came up: Melanin in the skin blocks ultra-violet light, so African-Americans absorb only about a third as much as Caucasians. So those who worked indoors or in overcast conditions were prone to rickets, and some doctors even came to think of it as a disease of slaves! Even as late as the 1950s more black women had obstetrical problems due to pelvises deformed by low-grade rickets, and as late as 1977 a national study of black pre-schoolers found a very high incidence of at least sub-clinical rickets. The full body clothing of some Muslim women in some cultures is also associated with increased rickets, and in Ethiopia, full swaddling of babies again leads to a higher incidence of rickets in children there. The problem with some northern animal livers is that they are so rich in Vitamins A and D that they can make you sick---it's called "Hyper-vitaminosis"---applicable only to the fat-soluble vitamins---and if you have a nice meal of polar bear liver it can kill you!

A little chronology: In 1915, McCollum, Davis & Kennedy described vitamins A and B, though sub-types were soon discerned in the following years.

Around 1920 H. Steenbock in the USA developed and patented a food irradiation process using ultraviolet light, and found that common foods treated this way could protect many children against this horrible disease. By 1924, most Americans were consuming irradiated milk and bread, and within a few years rickets was nearly eradicated throughout the country. About that same time, a German scientist identified three different types of vitamin D, of which two were derived from irradiated plant sterols and the third from irradiated skin; this last is the form we receive when we are exposed to sunshine. This information led scientists to develop a way to synthesize vitamin D, and since this was much more economical than irradiation, many food manufacturers began adding the vitamin to their products.

Beriberi: Vitamin B1 (Thiamine) Deficiency

Beriberi was described by the Chinese around 2700 BC, but the cause was unknown. As seen on the chart to the left, it's a multi-system disease. The fellow at the right also probably suffers from starvation---some of the prisoners of the Japanese at the end of WW2 had this condition. But other people may not be starved of protein or calories, but still become sick.

Takaki and the Japanese Navy

Beriberi was not uncommon on long naval voyages and was thought to have much in common with scurvy. As Lind had done more than a century earlier regarding scurvy, so too there was a similar controlled trial in the early 1880s in the Japanese navy. A Japanese medical officer, Kanehiro Takaki, arranged that 2 ships left Japan on similar voyages, but with different diets. The first ship served the usual fare of rice, with some vegetables and fish. The second also served the crew wheat and milk, in addition to more meat than was served on the first ship. The results were impressive, with 25 deaths from beriberi on the first ship and none on the other. The Japanese Admiralty adopted the new diet for the entire navy. The problem is that the results were published in a language few people could read, and so the dissemination of knowledge was feeble---another point of this lecture series. Even today good work is not being adequately disseminated. The internet, search engines, and more tools for translation may make this easier, but progress needs to continue. (A note: Although the Japanese became "the enemy" in the 1940s and propaganda discounted the ability of this people, it should be noted that in the late 19th and early 20th century, there were a number of Japanese scientists who traveled internationally and made significant contributions in a variety of fields!)

Christiaan Eijkman

Physicians and tropical medical specialists were wondering if this was another as-yet-unrecognized type of infection, and that's what they were looking for when they sent Dr. Christiaan Eijkman, who had been trained in Robert Koch's laboratory in Germany in the new field of bacteriology (see previous and next lecture about Koch), to ...

Frederick G. Hopkins

In 1926 two biochemists called Jansen and Donath isolated a tiny amount of a substance they called aneurin but there was too little to be of much value. In 1936 thiamine was finally identified and created synthetically. Commercial production did not start until 1937 but it achieved great importance in the 1950s with the demand for the fortification of food.

Interestingly, after 1950, the growth of rice polishing mills throughout the Pacific area led to far more beriberi. As I mentioned, hand husking and threshing weren’t as efficient, and so left more of the vitamins in the rice. It took a lot of politics and hustling to get the companies to cut back on their efficiency, leaving enough vitamin in. Others began to introduce artificially added vitamin (in the USA, this meant refining flour and then re-enriching it with synthetic thiamine), and we remember companies advertising how their bread was enriched. But in fact, beriberi is still widespread due to the Oriental equivalent of junk food.

Pellagra: Vitamin B3 (Niacin) Deficiency

Although described in Italy in 1735, pellagra was not recognized in the United States until the early 20th century. This disorder, like beriberi, affects many systems in the body. In 1902, a Georgia farmer complaining of weight loss, great blisters on his hands and arms, and melancholy every spring for the previous 15 years was recognized to be suffering from pellagra. Four years would elapse until it was diagnosed again, this time in an Alabama insane asylum, where the classic constellation of diarrhea, dermatitis, dementia and even death appeared in multiple inmates. Over the next five years, southern clinicians would increasingly diagnose pellagra among their poor and institutionalized populations. These people subsisted largely on a diet of corn and fat pork. The situation was most acute for those living in prisons, orphanages, and asylums. Eight states from 1907 through 1911 recorded 15,870 cases of pellagra with a 39% fatality rate. In South Carolina alone in 1912, there were 30,000 cases with 12,000 deaths. But this underestimated the problem, as only 1 in 6 people suffering from pellagra sought out a physician.

Joseph Goldberger

Manipulating diet in experimental studies, he was able to both create and cure pellagra. Despite the denial of southern politicians that such malnutrition existed, in each of the years 1928, 1929, and 1930, the South suffered more than 200,000 cases of pellagra and 7,000 deaths. Like hookworm, this disease further weakened the southern workforce and stunted the physical and mental growth of children.

A major part of this story is that Goldberger sought for many years to promote better nutrition, a campaign that pitted him against those who wanted to spend as little money as possible: employers, of course, but also politicians whose careers to some extent depended on the contributions of their wealthier constituents.

Goldberger's prescription of a nutritious diet was beyond the means of many Southerners, but in the 1920s, researchers found that brewer's yeast could prevent the disease. After work in the 1930s showed a lack of nicotinic acid (niacin) to be the precise defect in pellagra, flour producers began to enrich both white and corn flour with the newly identified vitamin. Such foods, coupled with rising prosperity after World War II, finally eradicated pellagra in the South.

Goldberger worked tirelessly in his laboratories, here shown with his assistant and in another laboratory that was in effect more of a kitchen!

Summary

It should be noted that just because it’s a vitamin doesn't mean that it's a panacea---another word for cure-all. The National Institutes of Health (NIH) has coordinated a variety of studies into the health benefits of various vitamins. According to a recent news article in the Austin American-Statesman newspaper (12/25/08, page A-13), a number of trials of alternative health approaches have been done by a variety of organizations. The claim by alternative health folks that the “establishment” isn’t doing anything is clearly not true; there are many groups trying to explore plausible hypotheses. But just because there are claims doesn’t mean they are true. For example, in two long-term trials involving more than 50,000 people, Vitamin C, Vitamin E, and/or selenium supplements did NOT reduce the risk of cancer of the prostate, bladder, or pancreas. And in other studies, over-the-counter vitamins were of no help in preventing stroke or cardiovascular disease. As mentioned above, certain vitamins, especially the fat-soluble ones, A and D, accumulate in fatty tissues when taken in high doses and may in themselves be toxic and cause "hyper-vitaminosis" diseases. It's also possible to ingest too much of certain minerals. Yet 64% of Americans take vitamin supplements, and sales in the last twelve years have doubled. Other research: Vitamin A in the form of beta carotene did not help and may even add to the risk of cardiovascular disease. Among eighteen thousand smokers or people with asbestos in their lungs, the incidence of cancer was 28% higher in those who took beta carotene and/or Vitamin A. (Perhaps beta carotene curbs the body’s ability to metabolize or break down excessive Vitamin A.)

Furthermore, the NIH found through its Women's Health Study of forty thousand women over ten years that those who took Vitamin E showed no statistical benefit compared to those who didn't when it came to the incidence of heart disease, and the "anti-oxidant" properties of Vitamin C or beta-carotene also showed no effect when it came to heart disease. A health study of physicians again showed no benefit from Vitamin C or Vitamin E, and in another 36,000 women, Vitamin D or selenium offered no protection against breast cancer.

The need for a more varied diet with a wider range of vitamins and minerals came to be recognized only around 80 to 110 years ago. That is to say, while the first four lectures dealt with events generally occurring from the mid-1700s through around 1870, the lecture today addresses the era known as the fin de siècle (French for “end of the century”), referring to the end of the 19th and beginning of the 20th century; and next week we’ll talk about changes that began in the mid-19th century but continue well into the present time.

Anyway, vitamins emerged during my parents’ youth and still were a big thing when I was growing up. They seemed so special because they were an emerging technology. Deficiency disease was still prevalent when my parents were growing up. Foods weren’t yet being fortified, a term that means adding synthetically produced vitamin D, some B vitamins, iodine in the salt, and so forth. So, in our parents’ honor, I want us to join them in appreciating these developments by learning a bit about how these changes came about, and in honoring the efforts of the pioneers.

The funny thing is that many of the pioneers were right for partly the wrong reasons, or were wrong for a while before they finally came up right, and that’s another point of these stories. When I was growing up, discoverers, it seemed, knew where they were going and how to get there. Only later did I discover that, say, Columbus was largely mistaken about much of what he thought he had discovered, plus his management of his team and the indigenous tribes he encountered was, let’s just say, poor.

Complicating this is that people often suffered from multiple dietary deficiencies, perhaps co-morbid with other chronic infections such as hookworm disease, malaria, and so forth. The term “co-morbid” is worth knowing, meaning simply that a person can come into the emergency room with both a broken arm and a burn.

Another disorder of malnutrition came with some of the elderly patients who drifted into a tea-and-toast type diet, emphasizing easy-to-prepare foods, and as a result developing a variety of nutritional deficiency diseases. I suspect this to be happening even today!

I interpret the last part of the first aphorism of Hippocrates, the father of medicine, as a call on the physician to be active in public health, and ultimately in politics. One might even wonder about the boundary with religion: in a number of religious traditions there are questions about the basic question of ... but is it good for health? It’s the politics that matters here: not just the politics of getting an idea accepted within the profession, which we have talked about, but getting an idea accepted by the politicians in the service of changing the social system. A major part of this is just getting the haves in control to arrange to share a bit with the have-nots out of power, and I’m not talking about small changes, nor class warfare, whatever that means. (I may do a series on the realities of class in modern society, and how folks try to pretend that it’s not there.) But the truth is that in many parts of the world, folks are sick and dying and starving to death. So can physicians rightly try to make externals cooperate? This is the boundary of public health that we’ll weave in both today and next time.

References

Frankenburg, Frances R. (2009). Vitamin discoveries and disasters: History, science & controversies. Santa Barbara, CA: Praeger / ABC-CLIO.